Fedorenko, E., Ivanova, A.A. & Regev, T.I. The language network as a natural kind within the broader landscape of the human brain. Nat. Rev. Neurosci. 25, 289–312 (2024).

Large language models (LLMs) exhibit remarkable capabilities not only on language tasks but also on various tasks that are not linguistic in nature, such as logical reasoning and social inference. In the human brain, neuroscience has identified a core language system that selectively and causally supports language processing. Here we ask whether similar specialization for language emerges in LLMs. We identify language-selective units within 18 popular LLMs, using the same localization approach that is used in neuroscience. We then establish the causal role of these units by demonstrating that ablating LLM language-selective units -- but not random units -- leads to drastic deficits in language tasks. Correspondingly, language-selective LLM units are more aligned with brain recordings from the human language system than random units are. Finally, we investigate whether our localization method extends to other cognitive domains: while we find specialized networks in some LLMs for reasoning and social capabilities, there are substantial differences among models. These findings provide functional and causal evidence for specialization in large language models, and highlight parallels with the functional organization of the brain.

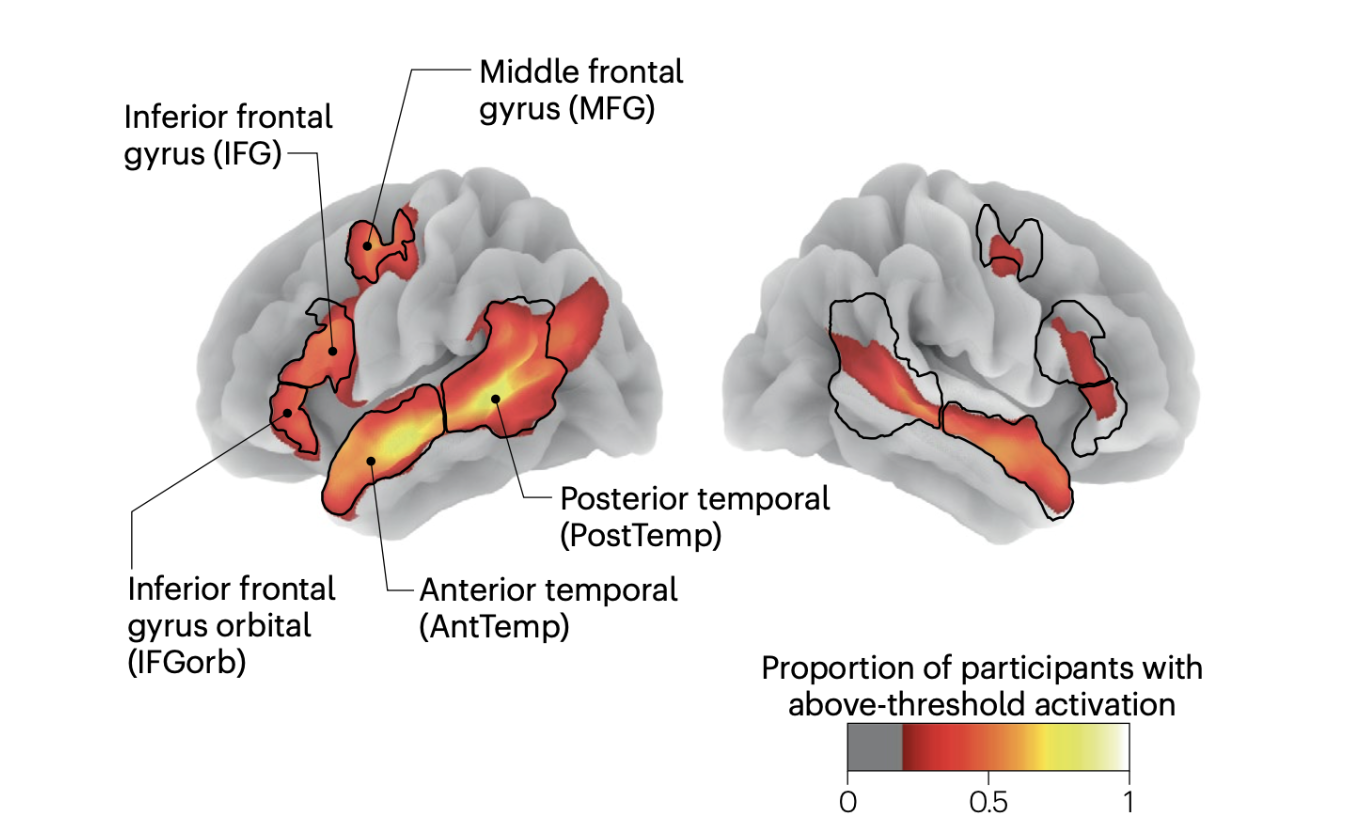

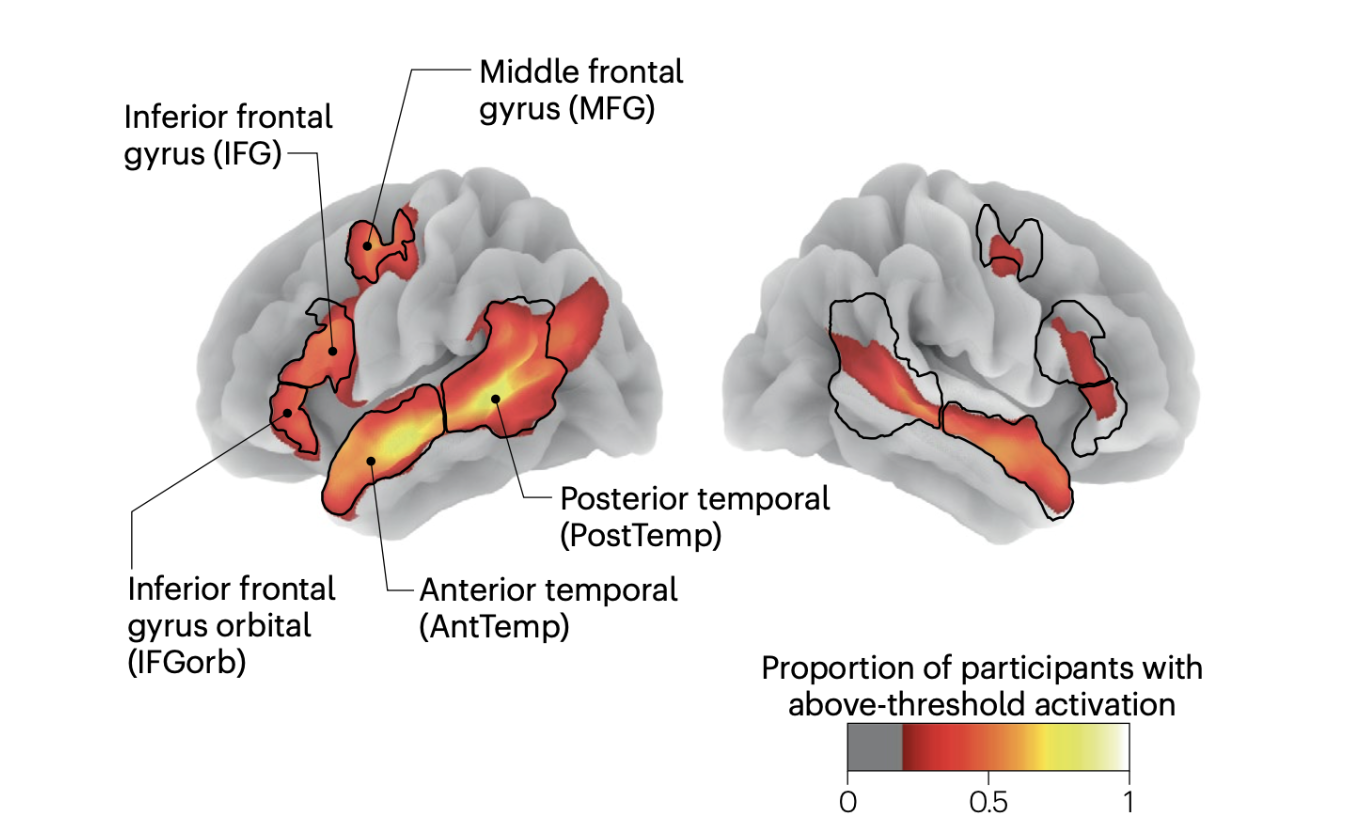

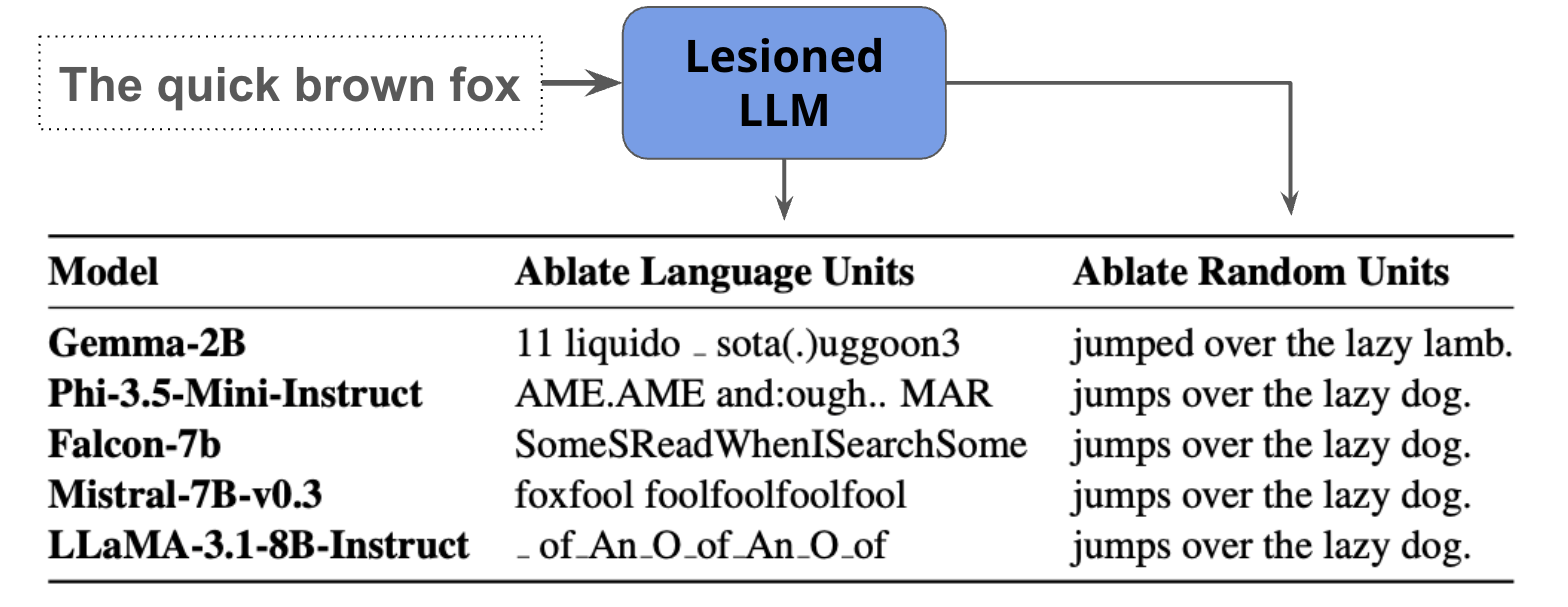

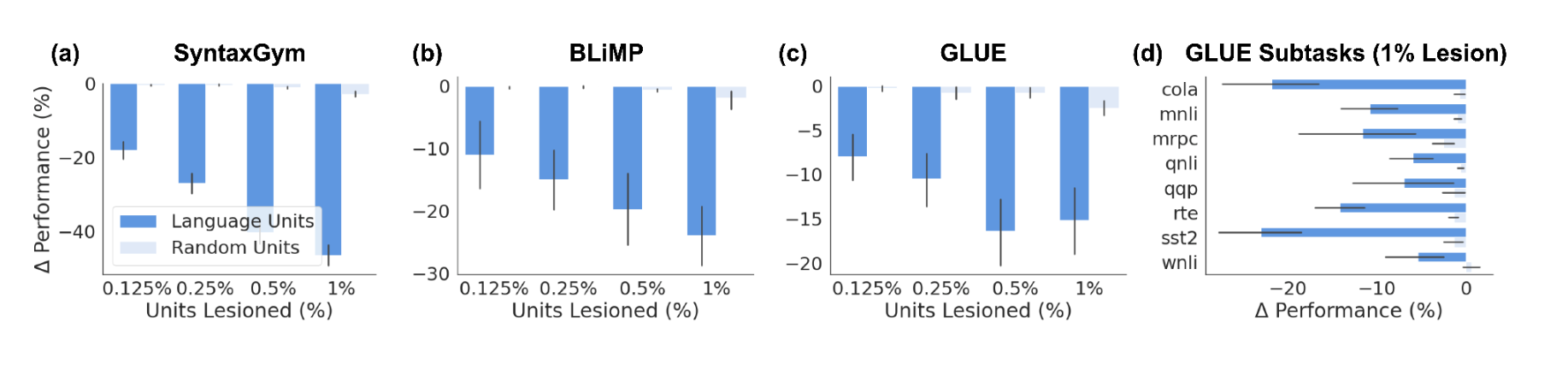

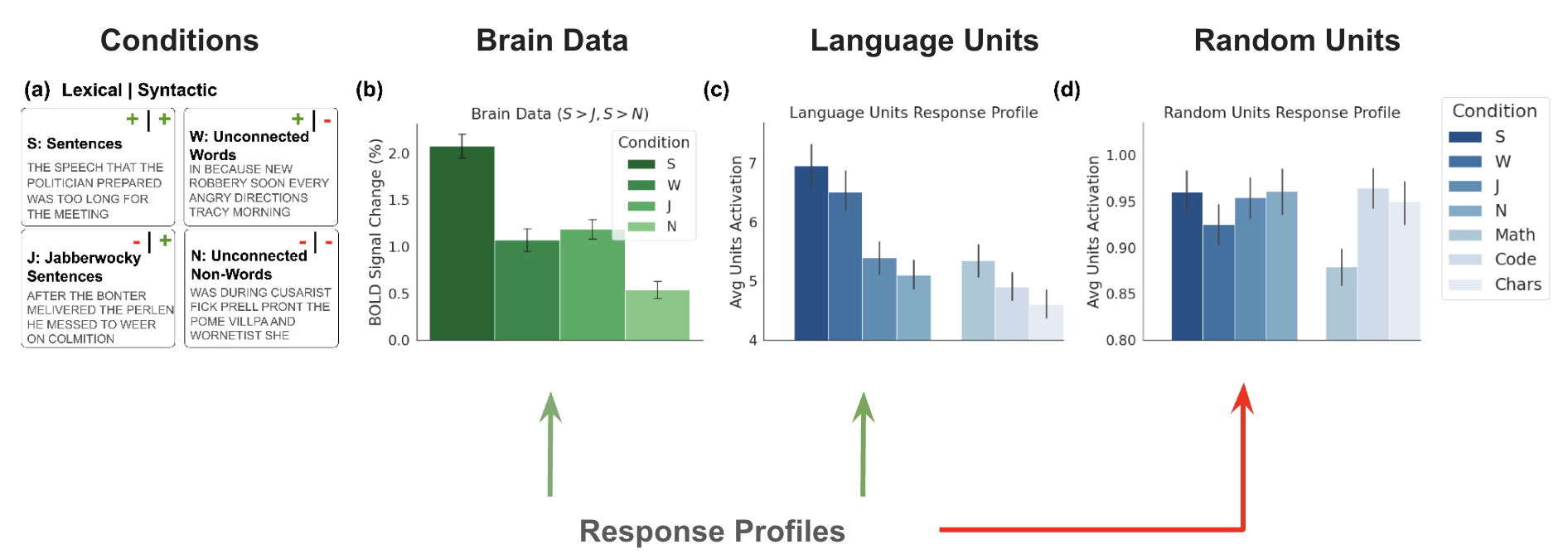

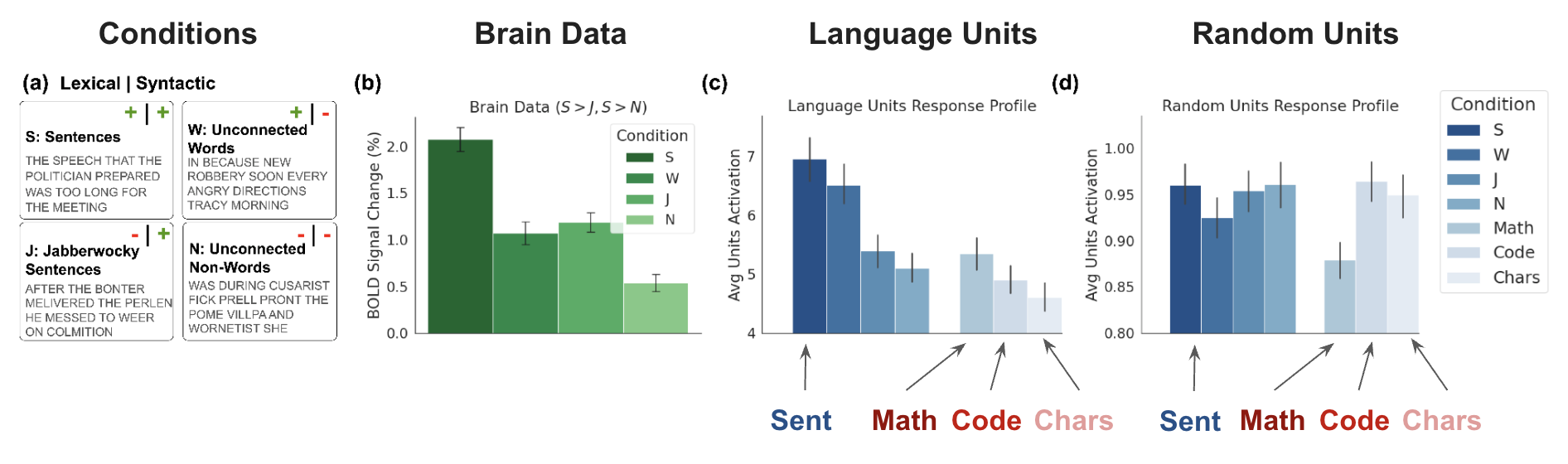

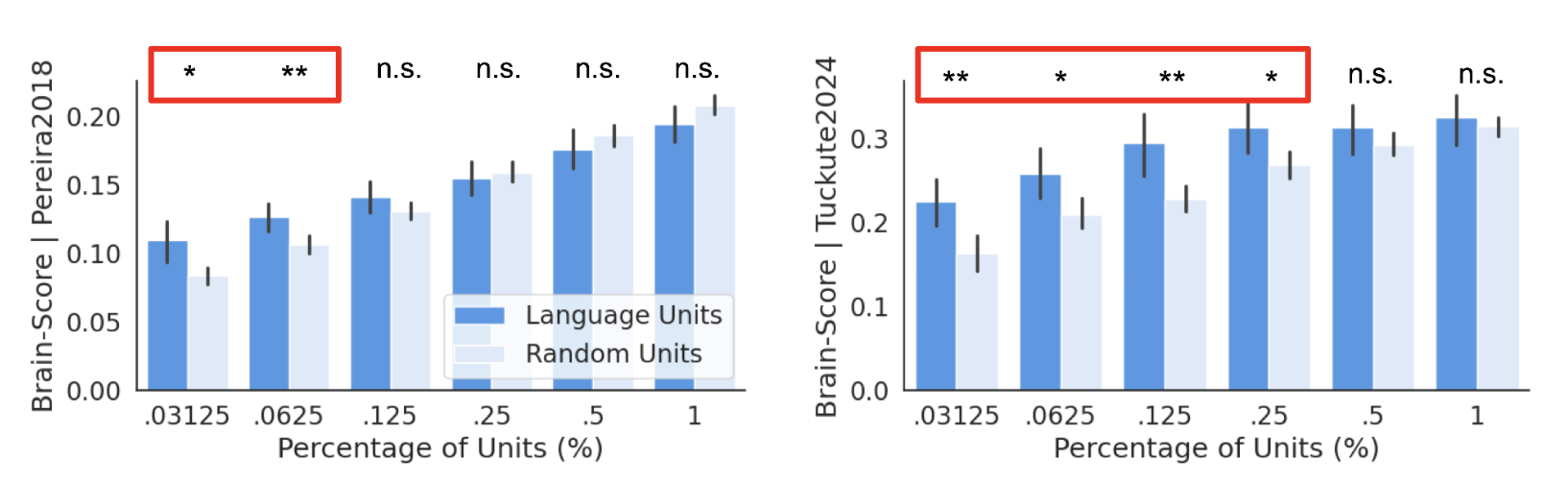

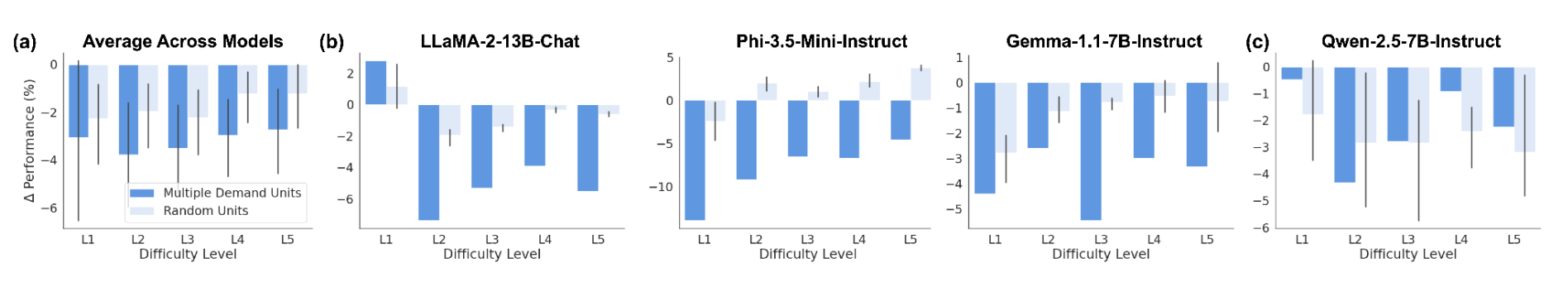

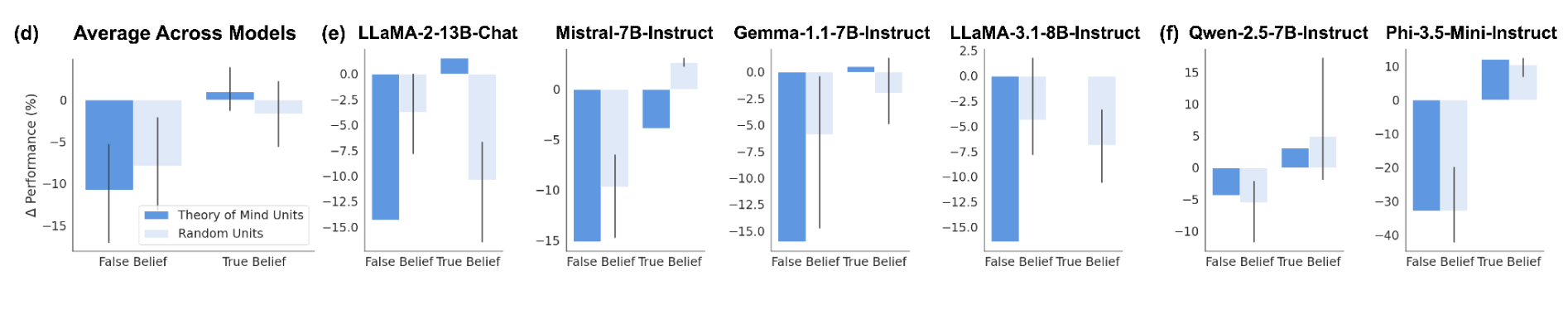

We explore whether the functional specialization observed in the human brain can be identified in LLMs. We applied the same localizers used in neuroscience to uncover language-selective units within LLMs, and showed that a small subset of these units is crucial for language modeling. Our lesion studies revealed that ablating even a small fraction of these units leads to significant drops in language performance across multiple benchmarks, whereas ablating randomly sampled non-language units had no comparable effect. Although we successfully identified a language network analog in all models studied, we found mixed results when applying similar localization techniques to the Theory of Mind and Multiple Demand networks, suggesting that not all cognitive functions map neatly onto current LLMs. These findings provide new insights into the internal structure of LLMs and open up avenues for further exploration of parallels between artificial systems and the human brain.
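The localize-then-ablate idea described above can be illustrated with a small, self-contained sketch. It is not the authors' implementation: it assumes that localization contrasts unit activations for language stimuli against a control condition using Welch's t-statistic, that the top 1% of units are kept, and that ablation means setting a unit's activation to zero. The function names (`welch_t`, `localize_units`, `ablate`) and the synthetic activations are hypothetical and exist only for this example.

```python
import math
import random
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t-statistic for two samples with possibly unequal variances."""
    se = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / se if se > 0 else 0.0


def localize_units(lang_acts, ctrl_acts, fraction=0.01):
    """Return indices of the top `fraction` of units by language > control contrast.

    lang_acts and ctrl_acts are lists of per-stimulus activation vectors
    (an assumed format, one float per unit).
    """
    n_units = len(lang_acts[0])
    scores = [
        welch_t([row[u] for row in lang_acts], [row[u] for row in ctrl_acts])
        for u in range(n_units)
    ]
    k = max(1, round(fraction * n_units))
    return sorted(range(n_units), key=lambda u: scores[u], reverse=True)[:k]


def ablate(activations, unit_indices):
    """Return a copy of one activation vector with the chosen units zeroed."""
    lesioned = list(activations)
    for u in unit_indices:
        lesioned[u] = 0.0
    return lesioned


# Synthetic demo: 200 units, two of which respond more strongly to "language".
rng = random.Random(0)
n_units, n_stimuli = 200, 40
selective = {3, 57}  # hypothetical ground-truth language-selective units


def fake_acts(is_language):
    """Gaussian noise, with a mean shift on the selective units for language stimuli."""
    return [
        [
            rng.gauss(3.0 if (is_language and u in selective) else 0.0, 1.0)
            for u in range(n_units)
        ]
        for _ in range(n_stimuli)
    ]


lang_acts = fake_acts(True)
ctrl_acts = fake_acts(False)

language_units = localize_units(lang_acts, ctrl_acts, fraction=0.01)

# Control condition: the same number of units, sampled from outside the selected set.
random_units = rng.sample(
    [u for u in range(n_units) if u not in language_units], len(language_units)
)

lesioned_language = ablate(lang_acts[0], language_units)
lesioned_random = ablate(lang_acts[0], random_units)
```

In a real model, the activations would come from hidden states recorded while the network reads each stimulus, and the two lesioned variants would then be compared on language benchmarks. The random-unit control is what lets a performance drop be attributed to the selected units rather than to ablation in general.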

Finding I: Ablating 1% of LLM "language network" units disrupts next-word prediction performance (and performance on various other linguistic benchmarks).

Finding II: Language units exhibit response profiles similar to those of the human language network.

Finding III: Language units are selective for language, as is the human language network.

Finding IV: Language units predict brain activity in the human language system better than random units do.

Finding V: Lesioning multiple demand units affects mathematical reasoning benchmarks in some models but not others; the same holds for lesioning theory of mind units on a theory of mind benchmark.

@inproceedings{alkhamissi-etal-2025-llm-language-network,
    title = "The {LLM} Language Network: A Neuroscientific Approach for Identifying Causally Task-Relevant Units",
    author = "AlKhamissi, Badr and
      Tuckute, Greta and
      Bosselut, Antoine and
      Schrimpf, Martin",
    editor = "Chiruzzo, Luis and
      Ritter, Alan and
      Wang, Lu",
    booktitle = "Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers)",
    month = apr,
    year = "2025",
    address = "Albuquerque, New Mexico",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2025.naacl-long.544/",
    doi = "10.18653/v1/2025.naacl-long.544",
    pages = "10887--10911",
    ISBN = "979-8-89176-189-6",
}

This website is adapted from LLaVA-VL, Nerfies, and VL-RewardBench, licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.